Serverless applications in Kubernetes with WebAssembly

Kubernetes is the most widely deployed orchestrator today. Many organizations and users rely on it to deploy and manage all kinds of workloads, from ephemeral job runners for continuous integration tasks to business-critical applications.

Although containers are the traditional way to deploy applications in Kubernetes, they are not the only option. Kubernetes lets you extend its capabilities in different ways, such as adding new APIs and runtimes. For example, KubeVirt enables Kubernetes to run containers and virtual machines together.

Here is where WebAssembly (Wasm) meets Kubernetes. If you are not familiar with this technology, think of WebAssembly modules as a lightweight version of containers. These modules run on top of a Wasm Virtual Machine or runtime which provides a sandboxed environment where the application will run. This sandbox is often enough to run entire applications without requiring more abstraction layers.

Keep reading to learn how you can deploy WebAssembly-based serverless applications in your Kubernetes clusters.

Wasm Workers Server

Wasm Workers Server (WWS) is an OSS project that allows developing and running serverless applications. These applications are composed of individual units called workers or functions. Thanks to WebAssembly, you can develop these workers in different languages like JavaScript, Rust, Python, Ruby and Go.

WWS provides different features to your application such as static asset management, configuration files, Key/Value storage and more. You only need to focus on the code and Wasm Workers Server will provide you with the rest.

To try it out, install the latest version and run an example:

$ curl -fsSL https://workers.wasmlabs.dev/install | bash && \
  wws https://github.com/vmware-labs/wasm-workers-server.git \
  --install-runtimes --git-folder "examples/js-basic"

containerd

containerd is an open and reliable container runtime. It is also very configurable and extensible.

One of the many ways in which it can be extended is with runwasi.

runwasi

runwasi is a library to integrate with containerd and its container lifecycle. It plugs into the calls that containerd receives to create, edit, or delete a sandbox. It focuses on WebAssembly runtimes by providing all the utilities and hooks to run applications based on Wasm modules.

In general, it simplifies how you connect your Wasm runtime to the container lifecycle calls. The final binary is called a "containerd shim", and it can interact with any containerd installation. This ranges from a user running the ctr CLI tool to the Kubernetes kubelet making calls through the CRI plugin interface.
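
To make the idea of a shim binary more concrete, here is a minimal sketch of the naming convention that containerd follows for runtime v2 shims: the last two dot-separated components of the runtime type become the name and version of the binary it looks for. The function name is ours, and the `io.containerd.wws.v1` runtime type is an assumption about how the wws shim is registered, not something stated in this article.

```python
def shim_binary_name(runtime_type: str) -> str:
    """Derive the shim binary name containerd would look up for a runtime type.

    Sketch of the convention only: "<prefix>.<name>.<version>" maps to
    "containerd-shim-<name>-<version>".
    """
    parts = runtime_type.split(".")
    if len(parts) < 2 or not all(parts):
        raise ValueError(f"unexpected runtime type: {runtime_type!r}")
    name, version = parts[-2], parts[-1]
    return f"containerd-shim-{name}-{version}"


# The default runc runtime type, followed by the assumed type for the wws shim.
print(shim_binary_name("io.containerd.runc.v2"))
print(shim_binary_name("io.containerd.wws.v1"))
```

Under this convention, adding a new runtime to a node mostly means placing a binary with the right name on the path and referencing its runtime type in the containerd configuration.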

The runwasi repository contains two Wasm runtimes: wasmtime and WasmEdge. However, we might be interested in running our WebAssembly modules in runtimes that have specific or different functionality baked in.

Fear not, the deislabs team at Microsoft has us covered with the containerd-wasm-shims project. But more on that later.

Before diving into the details, let's try it out!

Deploy your first Wasm-based serverless app in Kubernetes

If you prefer to run this example on your own machine, you will need the tools used in the commands below: kind, Helm, and kubectl.

We are going to use kind to create a new containerized cluster for experimentation. We will then deploy KWasm's operator, which enables nodes to run WebAssembly workloads and in turn installs Wasm Workers Server (wws), which we will use to run our WebAssembly example application.

First, create a cluster with kind:

$ kind create cluster

After the cluster has been initialized, add the KWasm Helm repository:

$ helm repo add kwasm http://kwasm.sh/kwasm-operator/

Now, install KWasm:

$ helm install -n kwasm \
  --create-namespace \
  kwasm-operator \
  kwasm/kwasm-operator

Because KWasm is a project for experimentation, it requires us to annotate the nodes that we want to instrument. Since we created our cluster with the default kind configuration, we only have one node, the control plane:

$ kubectl annotate node kind-control-plane kwasm.sh/kwasm-node=true

The next step is to create the Kubernetes RuntimeClass. A RuntimeClass is handy for scheduling workloads on runtimes that meet specific requirements: speed, security, you name it. Runtime classes are closely tied to the Container Runtime Interface (CRI) and to the container runtime, which is responsible for setting up the container sandbox when a pod is created on a node.

The new wws handler instructs containerd to use the wws shim when deploying new workloads on the node:

$ kubectl apply -f - <<EOF
apiVersion: node.k8s.io/v1
kind: RuntimeClass
metadata:
  name: wws
handler: wws
EOF

At this point, all prerequisites are met. You can create a new regular Kubernetes deployment that runs a wws application:

$ kubectl apply -f - <<EOF
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: wasm-wws
spec:
  replicas: 1
  selector:
    matchLabels:
      app: wasm-wws
  template:
    metadata:
      labels:
        app: wasm-wws
    spec:
      runtimeClassName: wws
      nodeSelector:
        kwasm.sh/kwasm-provisioned: kind-control-plane
      containers:
        - name: wws-hello
          image: ghcr.io/deislabs/containerd-wasm-shims/examples/wws-js-hello:v0.7.0
          command: ["/"]
          resources: # limit the resources to 128Mi of memory and 100m of CPU
            limits:
              cpu: 100m
              memory: 128Mi
            requests:
              cpu: 100m
              memory: 128Mi
---
apiVersion: v1
kind: Service
metadata:
  name: wasm-wws
spec:
  ports:
    - protocol: TCP
      port: 80
      targetPort: 3000
  selector:
    app: wasm-wws
EOF

Now, Wasm Workers Server is serving a JavaScript function within the quickjs JavaScript runtime, compiled to WebAssembly.

The last step is to try it!

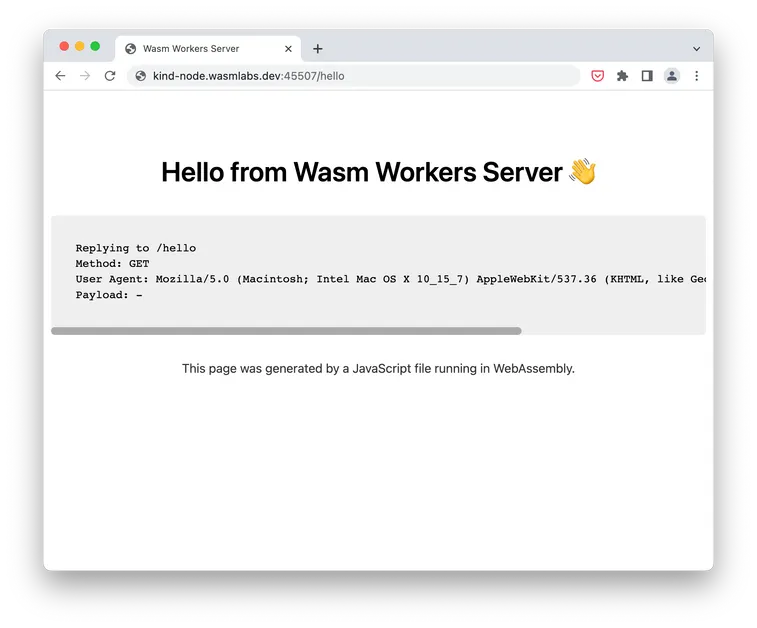

$ kubectl port-forward --address=0.0.0.0 svc/wasm-wws :80

Forwarding from 0.0.0.0:45507 -> 3000This will perform the port forwarding to the service in the foreground. With --address we are instructing the port-forward command to listen on all interfaces. Visit it:
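
If you would rather script the request than open a browser, a small helper along these lines should work. It is only a sketch: the function names are ours, the default host assumes the port-forward is running on the same machine, and the port must be the one printed by your own kubectl port-forward command.

```python
import urllib.request
from typing import Tuple


def forwarded_url(port: int, path: str = "/", host: str = "localhost") -> str:
    """Build the URL for a path served through the local port-forward."""
    if not path.startswith("/"):
        path = "/" + path
    return f"http://{host}:{port}{path}"


def fetch(port: int, path: str = "/") -> Tuple[int, str]:
    """Send a GET request through the port-forward; return (status, body)."""
    with urllib.request.urlopen(forwarded_url(port, path), timeout=5) as response:
        body = response.read().decode("utf-8", errors="replace")
        return response.status, body
```

With the output shown above, calling fetch(45507) would request http://localhost:45507/ while the port-forward command is still running.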

That's it! Wasm Workers Server (wws) just served a request on top of Kubernetes thanks to WebAssembly, containerd, runwasi and KWasm!

Now, how do all the pieces fit together?

How does it work?

When setting up a container sandbox, containerd allows you to plug into this process and create something else instead: a WebAssembly sandbox.
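
The lookup that decides which kind of sandbox gets created can be sketched as a chain of two table lookups: the pod names a RuntimeClass, the RuntimeClass names a handler, and the handler selects a runtime entry in the containerd configuration. The RuntimeClass table below mirrors the manifest from this article; the containerd runtime table, the runtime type strings and the function name are assumptions made for illustration.

```python
# Mirrors the RuntimeClass created earlier: name "wws", handler "wws".
RUNTIME_CLASSES = {"wws": {"handler": "wws"}}

# Assumed containerd runtime entries on the node, keyed by handler name.
CONTAINERD_RUNTIMES = {
    "runc": {"runtime_type": "io.containerd.runc.v2"},
    "wws": {"runtime_type": "io.containerd.wws.v1"},
}


def resolve_runtime_type(pod_spec, runtime_classes=RUNTIME_CLASSES,
                         containerd_runtimes=CONTAINERD_RUNTIMES,
                         default_handler="runc"):
    """Follow pod -> RuntimeClass -> handler -> containerd runtime entry."""
    class_name = pod_spec.get("runtimeClassName")
    if class_name is None:
        # Pods without a RuntimeClass use the default handler.
        handler = default_handler
    elif class_name in runtime_classes:
        handler = runtime_classes[class_name]["handler"]
    else:
        raise LookupError(f"unknown RuntimeClass: {class_name}")
    if handler not in containerd_runtimes:
        raise LookupError(f"no containerd runtime for handler: {handler}")
    return containerd_runtimes[handler]["runtime_type"]


# The pod template from the deployment in this article sets runtimeClassName.
print(resolve_runtime_type({"runtimeClassName": "wws"}))
print(resolve_runtime_type({}))
```

A pod that omits runtimeClassName keeps using the regular container runtime, which is why Wasm and container workloads can share the same node.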

This is where runwasi, as we have seen before, comes into play. It implements the containerd container runtime shim, and it integrates two WebAssembly runtimes as of today: wasmtime and WasmEdge. However, we might be interested in having extra functionality on top of a WebAssembly runtime.

Enter containerd-wasm-shims.

containerd-wasm-shims

This project allows you to run WebAssembly modules in more WebAssembly runtimes than the ones provided by the runwasi project. These runtimes might provide us with different capabilities.

Every runtime comes with different features. For example, Wasm Workers Server scans the working directory for WebAssembly modules, exposes each one at its own route, and starts an HTTP server to handle user requests.

The containerd-wasm-shims project collects different Wasm runtimes that integrate with containerd using runwasi as a library. Currently, it features SpiderLightning, Spin and Wasm Workers Server (wws) runtimes.

containerd-shim-wws

Now we have put some pieces of the puzzle together: containerd, runwasi and containerd-wasm-shims.

Because Wasm Workers Server (wws) is written in Rust, it was very convenient to integrate it as a new shim in containerd-wasm-shims: containerd-shim-wws.

The containerd-shim-wws shim starts the core logic of Wasm Workers Server. When you deploy a new application, it pulls the source from an OCI image and extracts your code. Then, it explores the current working directory looking for functions and serves them at the corresponding route. Now containerd has a developer-friendly serverless platform at its fingertips.
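
The directory scan described above can be pictured with a small sketch. This is not the wws implementation, which is written in Rust; the extension list, the "index" convention and the function names are assumptions made for illustration.

```python
from pathlib import PurePosixPath
from typing import Dict, Iterable, Optional

# Hypothetical set of file extensions treated as workers in this sketch.
WORKER_EXTENSIONS = {".js", ".wasm"}


def route_for(path: str) -> Optional[str]:
    """Map a file path (relative to the working directory) to an HTTP route."""
    file_path = PurePosixPath(path)
    if file_path.suffix not in WORKER_EXTENSIONS:
        return None  # not a worker, e.g. a README or a static asset
    segments = list(file_path.with_suffix("").parts)
    # Assumed convention: an "index" file answers for its parent directory.
    if segments and segments[-1] == "index":
        segments.pop()
    return "/" + "/".join(segments)


def build_routes(paths: Iterable[str]) -> Dict[str, str]:
    """Return a route -> worker file table for every worker found."""
    routes = {}
    for path in sorted(paths):
        route = route_for(path)
        if route is not None:
            routes[route] = path
    return routes


print(build_routes(["index.js", "api/hello.js", "README.md"]))
```

The point of the sketch is that the file layout itself is the routing table, so adding a function is a matter of adding a file.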

We are delighted to announce that the containerd-shim-wws shim is available on containerd-wasm-shims as of version 0.7.0! 🚀

KWasm

KWasm is an open source project developed by the Liquid Reply team, with the goal of simplifying the integration of WebAssembly with Kubernetes. At its core is the KWasm operator, which installs existing WebAssembly runtimes on Kubernetes nodes to accelerate experimentation and development.

The KWasm operator comes with a Helm chart, which makes it easy to deploy. The node setup phase is scripted in a node-installer container image, which packages the containerd-wasm-shims project, among other WebAssembly runtimes.

Conclusion

In this article we have learnt about the Wasm Workers Server project, its main goals, and how it can help you as a developer.

We then introduced the runwasi project. We went through an interactive demonstration of how to execute JavaScript within a WebAssembly sandbox on top of Kubernetes, which showcases all the concepts in this article.

After the demo, we dug a bit into the details of how this example works and which components are involved. We described the containerd-wasm-shims, containerd-shim-wws and KWasm projects, and how they fit together.

As you can see, there are straightforward ways to run WebAssembly workloads on top of Kubernetes. Although many of the projects used in this example are not production-ready (yet), it's a very interesting space that can help us imagine how to integrate workflows with different needs and requirements in our own toolchain and infrastructure.

We hope you liked it! Try Wasm Workers Server, star it, and let us know what you think. We welcome your contributions too!

Follow us on Twitter, and keep the conversation going! See you soon! 👋

Acknowledgements

We would like to thank David Justice and Jiaxiao Zhou from the Microsoft team for their help. We would also like to thank Sven Pfennig from the Liquid Reply team for his work on KWasm and his review of this article.