AI-powered workers in Wasm Workers Server

Interest in Artificial Intelligence (AI) has grown rapidly during 2023. Projects, models, and examples are released every day. A year ago, everyone got excited about AI. Today, everyone wants to use it.

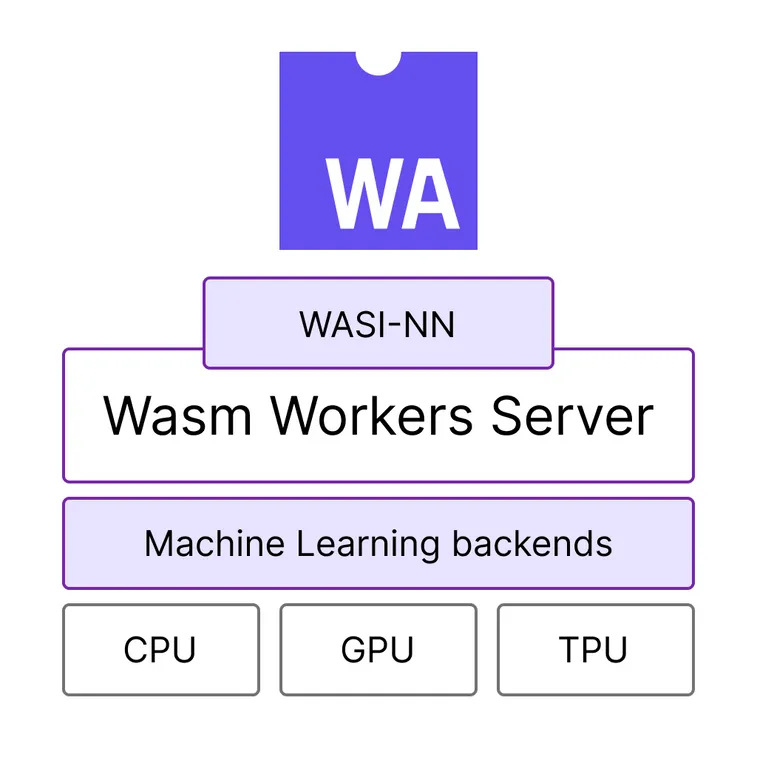

At Wasm Labs, we want you to start developing AI-powered applications. Today, we are happy to announce AI workloads in Wasm Workers Server thanks to WASI-NN! Available from v1.5, your workers can run inference using different Machine Learning (ML) models.

Let’s try it!

Your first AI-powered worker

Prerequisites

Before running the Wasm Workers Server AI demo, you need to install an inference runtime or backend in your environment. Currently, Wasm Workers Server only supports OpenVINO. However, work is ongoing to support new backends like KServe or TensorFlow Lite in the future.

To install OpenVINO, you can follow any of these guides:

Make sure you configure your current environment to detect your OpenVINO installation:

- On macOS and Linux:

      source $YOUR_OPENVINO_INSTALLATION/setupvars.sh

- On Windows:

      "C:\Program Files (x86)\Intel\openvino_2023\setupvars.bat"

Image classification worker

The Wasm Workers Server repository includes an AI demo for image classification. To run it, follow these steps:

- Update Wasm Workers Server to the latest version:

      curl -fsSL https://workers.wasmlabs.dev/install | bash

- Clone the Wasm Workers Server repo:

      git clone https://github.com/vmware-labs/wasm-workers-server.git && \
      cd ./wasm-workers-server/examples/rust-wasi-nn

- Run the prepare.sh script. It will download a MobileNet model from the OpenVINO team:

      ./prepare.sh

- Run the project:

      wws .

- Access the demo on http://localhost:8080

- Upload different images to test it.

That’s all! You just ran your first AI-powered application with Wasm Workers Server.

How does it work?

Wasm Workers Server relies on the WASI-NN proposal to run a Neural Network (NN) from your workers. This proposal defines a set of backend-agnostic methods to:

- Load an ML model.

- Set the input data.

- Run inference.

- Retrieve the output.

The WASI-NN implementation on the host side completes all these operations using the configured ML backend. In this case, Wasm Workers Server uses Wasmtime's wasi-nn crate. This means that inference runs on the host side, where it can take advantage of hardware accelerators like GPUs and TPUs.
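To make the four steps concrete, here is a minimal sketch in Python of the load, set input, run, and retrieve sequence. It is not the WASI-NN API or the Wasm Workers Server implementation: `ToyBackend`, its method names, and the "model" (a plain weight matrix) are hypothetical stand-ins, chosen only to mirror the order of operations a worker follows while the host does the actual computation.

```python
from dataclasses import dataclass, field


@dataclass
class ToyBackend:
    """Hypothetical host-side backend; stands in for a real ML runtime."""

    weights: list = field(default_factory=list)
    inputs: list = field(default_factory=list)
    outputs: list = field(default_factory=list)

    def load(self, weights):
        # Step 1: load a "model" (here, one row of weights per class).
        self.weights = [list(row) for row in weights]

    def set_input(self, tensor):
        # Step 2: hand the input data over to the backend.
        self.inputs = list(tensor)

    def compute(self):
        # Step 3: run inference (a dot product per class).
        self.outputs = [
            sum(w * x for w, x in zip(row, self.inputs)) for row in self.weights
        ]

    def get_output(self):
        # Step 4: retrieve the scores computed by the backend.
        return list(self.outputs)


def classify(backend, weights, tensor, labels):
    """Run the four steps in order and return the best-scoring label."""
    backend.load(weights)
    backend.set_input(tensor)
    backend.compute()
    scores = backend.get_output()
    best = max(range(len(scores)), key=scores.__getitem__)
    return labels[best]


if __name__ == "__main__":
    toy_weights = [[1.0, 0.0], [0.0, 1.0]]  # made-up two-class "model"
    print(classify(ToyBackend(), toy_weights, [0.2, 0.9], ["cat", "dog"]))
```

The worker-side code only sequences the calls. Everything inside `ToyBackend` plays the role of the host, which is where a real backend such as OpenVINO would do the heavy lifting.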

You can find all the WASI-NN details in the spec proposal and the related WebAssembly Interface Types (WIT) file. If you are curious about the Wasm Workers Server implementation, you can check the related pull request.

Configuration

As with any other feature, workers do not have access to the WASI-NN bindings by default. You need to enable and configure this feature in every worker that uses it.

Only a few workers in your application may require AI capabilities. For this reason, Wasm Workers Server follows a capability-based approach, giving the minimum permissions to every worker.
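The capability-based idea can be pictured as a deny-by-default check. The sketch below is a hypothetical illustration in Python, not how Wasm Workers Server is implemented: the function names and the in-memory dictionaries are assumptions, and only the key names (`features`, `wasi_nn`, `allowed_backends`) mirror the worker configuration shown in this section.

```python
def allowed_backends(worker_config):
    """Return the backends a worker may use; empty unless explicitly enabled."""
    features = worker_config.get("features", {})
    wasi_nn = features.get("wasi_nn", {})
    return set(wasi_nn.get("allowed_backends", []))


def can_use_backend(worker_config, backend):
    # Deny by default: only backends listed in the worker's own config pass.
    return backend in allowed_backends(worker_config)


# Hypothetical in-memory configs for two workers in the same application.
ai_worker = {"features": {"wasi_nn": {"allowed_backends": ["openvino"]}}}
plain_worker = {}

if __name__ == "__main__":
    print(can_use_backend(ai_worker, "openvino"))
    print(can_use_backend(plain_worker, "openvino"))
```

A worker without any configuration gets no backend at all, which is the point of granting the minimum permissions per worker.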

In this case, you only need to create a worker configuration file (a TOML file with the same name as the worker file) and enable the wasi-nn feature. In the following example, we mount a folder with different ML models and configure the wasi-nn feature:

    name = "wasi-nn"
    version = "1"

    [vars]
    MODEL = "model"

    [features]
    [features.wasi_nn]
    allowed_backends = ["openvino"]

    [[folders]]
    from = "./_models"
    to = "/tmp/model"

AI and WebAssembly

This is just the beginning of AI support in Wasm Workers Server. The entire ecosystem is growing thanks to the work of many amazing developers like you. Wasm Workers Server allows you to start coding AI-powered applications easily.

However, there are still some major limitations:

- All major Wasm runtimes use 32-bit addresses. This limits the ML models you can embed in a Wasm module to 4 GB.

- The current ML tooling is not available in Wasm. Most libraries are written for Python and require building native extensions, which is not a straightforward process.
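The 4 GB figure follows directly from the address width: 32 bits can address 2^32 bytes. As a rough sketch in Python, the snippet below compares that ceiling with the raw weight size of two models. The parameter counts are illustrative round numbers, not measurements of specific models, and the helper names are hypothetical.

```python
WASM32_ADDRESS_BITS = 32
MAX_LINEAR_MEMORY_BYTES = 2 ** WASM32_ADDRESS_BITS  # 4 GiB


def model_size_bytes(parameters, bytes_per_parameter):
    """Rough size of the raw weights, ignoring metadata and activations."""
    return parameters * bytes_per_parameter


def fits_in_wasm32(size_bytes):
    # The module also needs memory for code, stack, and heap, so this is
    # an optimistic upper bound rather than a guarantee.
    return size_bytes < MAX_LINEAR_MEMORY_BYTES


if __name__ == "__main__":
    # Illustrative parameter counts, not measurements of specific models.
    small = model_size_bytes(5_000_000, 4)      # 5M parameters, 32-bit floats
    large = model_size_bytes(7_000_000_000, 2)  # 7B parameters, 16-bit floats
    print(MAX_LINEAR_MEMORY_BYTES, fits_in_wasm32(small), fits_in_wasm32(large))
```

A small image classifier fits comfortably, while a multi-billion-parameter language model does not, even before accounting for the memory the module itself needs.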

Fortunately, the Wasm community is pushing to overcome these limitations!

Componentize-py

Joel Dice from Fermyon recently announced a new version of the componentize-py project. It allows you to create Wasm Components written in Python that use libraries with native extensions.

Named models

On the WASI-NN side, the proposal is evolving with new features like Named models. This new feature allows a Wasm runtime to preload ML models at the host, so the module can refer to them by name. Special mention to Andrew Brown from Intel and Matthew Tamayo-Rios from Methodic Labs for their work.
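As a rough mental model, named models behave like a registry that the host fills in before any module runs. The Python sketch below is a hypothetical illustration, not the WASI-NN interface or any runtime's implementation: the `ModelRegistry` class, its methods, and the placeholder bytes are assumptions made for the example.

```python
class ModelRegistry:
    """Hypothetical host-side registry of preloaded models."""

    def __init__(self):
        self._models = {}

    def preload(self, name, model_bytes):
        # Done once by the host, before any module runs.
        self._models[name] = bytes(model_bytes)

    def load_by_name(self, name):
        # Called on behalf of the module: it passes a name, not the bytes.
        if name not in self._models:
            raise KeyError(f"model {name!r} was not preloaded by the host")
        return self._models[name]


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.preload("mobilenet", b"\x00\x01\x02")  # placeholder bytes
    print(len(registry.load_by_name("mobilenet")))
```

In this picture the module only carries a short name, so the model data stays on the host side.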

AI everywhere!

The goal of Wasm Workers Server is to simplify the way you develop and deploy your serverless applications anywhere. From v1.5, you can develop and deploy AI-powered applications, expanding the possibilities and ideas you can build with Wasm Workers Server.

The entire AI ecosystem is moving fast. New models like Meta's Llama 2 have reduced the hardware requirements for inference, making it possible to run models based on this architecture in many different environments. All these new models represent a tremendous opportunity for WebAssembly.

We believe that the combination of AI + WebAssembly will push your applications to places you couldn't imagine before.